The original project by Robby Kraft was written in Objective-C using OpenGL, and is no longer supported.

This project ported the original code to Swift, then to Metal, and finally to use only the "simd" vector and matrix functions. The current code makes no reference to GLKit.

Virtually all aspects of this program are done and working. What's needed now is:

- documentation updates

- convert the meridian lines to use a real Metal filter instead of a canned image
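The second to-do item replaces the canned grid image with generated lines. As a rough sketch of the per-texel test such a Metal filter (for example, a fragment or compute shader) might run, here is the arithmetic in Swift. The function name, the 15° spacing, and the 0.5° line width are hypothetical and not taken from the project; in Metal Shading Language the same test would use `fmod`.

```swift
// Hypothetical per-texel test a procedural grid shader might run instead of
// sampling a canned grid image. u and v are texture coordinates in 0...1
// across an equirectangular image; spacing and line width are in degrees.
func isOnGridLine(u: Double, v: Double,
                  spacingDegrees: Double = 15,
                  lineWidthDegrees: Double = 0.5) -> Bool {
    let longitude = u * 360  // 0...360 across the image width
    let latitude = v * 180   // 0...180 from the top row to the bottom row

    // Distance in degrees from the nearest multiple of the spacing.
    func distanceToLine(_ angle: Double) -> Double {
        let r = angle.truncatingRemainder(dividingBy: spacingDegrees)
        return min(r, spacingDegrees - r)
    }

    let halfWidth = lineWidthDegrees / 2
    return distanceToLine(longitude) <= halfWidth || distanceToLine(latitude) <= halfWidth
}
```

Wherever the test passes, a shader would blend the line color into the panorama texel.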

I made more progress in June 2020, and finally got the panoramic and grid line images to show. It was just so painful to get there that I put the project aside for a while, hoping I'd get some comments or requests, but nope - nothing. Two stars for the port to Swift.

In any case, I really do want to finish this, and with some personal time before Christmas, I was able to get VRMode working. So really, except for a few small details, the Metal work is complete. What I still want to do is replace the grid line image with a UIView into which you can draw anything; that view becomes the basis of the overlay (the idea being that you could add text, etc., and have it mapped onto the inside of the sphere just like the image).

Stage 1: The Panorama.m Objective-C class was split into two classes, Panorama and Sphere. Both were converted to Swift, and everything appears to work OK. Complete.

Stage 2: Apple deprecated OpenGL, so switch from it to Metal. Complete.

Stage 3: Replace GLKit's matrix operations with equivalents using simd. In progress.

Stage 4: Provide a UIView (of the same dimensions as the base image) that replaces the grid image and supports additional visual elements such as markers, text signs, and similar items. In progress.
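Stage 3 swaps GLKit matrix helpers for simd equivalents. One detail that matters when doing this for Metal: `GLKMatrix4MakePerspective` targets OpenGL's clip-space depth range of -1...1, while Metal expects 0...1, so a straight transliteration gives the wrong depth terms. Below is a minimal sketch of a right-handed Metal perspective matrix. It uses the standard library's `SIMD4<Float>` and a small hypothetical `Matrix4` type so it runs without the simd module; on Apple platforms the same four columns would go into `simd_float4x4(columns:)`.

```swift
import Foundation

// Column-major 4x4 matrix built from the standard library's SIMD4<Float>.
// simd_float4x4(columns:) has the same layout; this sketch avoids the simd
// module so it stays self-contained.
struct Matrix4 {
    var columns: (SIMD4<Float>, SIMD4<Float>, SIMD4<Float>, SIMD4<Float>)

    // Matrix-vector product: the columns weighted by the vector's components.
    static func * (m: Matrix4, v: SIMD4<Float>) -> SIMD4<Float> {
        var result = m.columns.0 * v.x
        result += m.columns.1 * v.y
        result += m.columns.2 * v.z
        result += m.columns.3 * v.w
        return result
    }
}

// Right-handed perspective projection for Metal, whose clip-space depth runs
// from 0 at the near plane to 1 at the far plane. GLKMatrix4MakePerspective
// targets OpenGL's -1...1 depth range, so its depth terms differ.
func perspectiveMetal(fovyRadians: Float, aspect: Float, nearZ: Float, farZ: Float) -> Matrix4 {
    let ys = Float(1.0 / tan(Double(fovyRadians) * 0.5))  // cot(fovy / 2)
    let xs = ys / aspect
    let zs = farZ / (nearZ - farZ)
    return Matrix4(columns: (
        SIMD4<Float>(xs, 0, 0, 0),
        SIMD4<Float>(0, ys, 0, 0),
        SIMD4<Float>(0, 0, zs, -1),
        SIMD4<Float>(0, 0, zs * nearZ, 0)
    ))
}
```

After the perspective divide, a point on the near plane lands at depth 0 and a point on the far plane at depth 1, which is what Metal's depth test expects.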

This project could not have succeeded without the support (handholding) of several Metal experts:

- Mike Smithwick, for creating the Sphere mapping code from Touch Fighter

- Robby Kraft, who took the Sphere code and created the original Panorama viewer in Objective C using OpenGL

- Warren Moore, who provided numerous suggestions and "how-tos" as I slogged through the OpenGL -> Metal conversion

- Zac Flamig, who helped me with the Metal Shader

- Maxwell @mxswd, who provided Metal code to assist in the dynamic overlay

Note that the older Swift conversion that uses OpenGL is now in the "Swift-OpenGL" branch, and the older "Metal" branch has become "master".

- passed the three matrices to the Metal shader, and now I have the "normal"-looking view!

- implemented blending of the image and the lines (you cannot believe how long this took!)

- updated the mapping routine to use vectors instead of an array of floats

- fixed a bug in how the triangles in Sphere were constructed (for some reason it was not visible...)
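The change log mentions blending the panorama with the grid lines but does not show how. A common approach is source-over compositing, where the overlay's alpha decides how much of each color shows. Here is a CPU-side Swift sketch of that per-texel arithmetic, assuming straight (non-premultiplied) alpha and an opaque panorama; it is an illustration, not the project's shader, and the function name is hypothetical.

```swift
// Source-over blend of an overlay (grid line) texel onto a panorama texel.
// Colors are straight (non-premultiplied) RGBA with components in 0...1, and
// the panorama is assumed to be opaque.
func blendOver(image: SIMD4<Float>, overlay: SIMD4<Float>) -> SIMD4<Float> {
    let a = overlay.w
    // Equivalent to Metal Shading Language's mix(image, overlay, a).
    var result = image * (1 - a) + overlay * a
    result.w = 1  // opaque output, since the panorama underneath is opaque
    return result
}
```

Instead of blending in the fragment shader, the same result can come from fixed-function blending: set `isBlendingEnabled` on the pipeline's color attachment, with `sourceRGBBlendFactor = .sourceAlpha` and `destinationRGBBlendFactor = .oneMinusSourceAlpha`, and draw the overlay after the panorama.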

See Panorama-Prefix.pch for instructions on switching between Objective-C and Swift.

- Metal powered

- orientation sensors to look around

- touch interactive

- pan to look around

- pinch to zoom

- a split screen mode for VR headsets

- helper functions to orient direction of camera and touches
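Pinch to zoom works through the field of view (`setFieldOfView:` takes degrees). One common mapping divides the field of view captured when the gesture began by the gesture's cumulative scale, then clamps the result. A minimal sketch, assuming that approach; the function name and the 30...90° limits are hypothetical, not PanoramaView's actual values.

```swift
// Hypothetical helper: map a pinch gesture's cumulative scale (as reported by
// UIPinchGestureRecognizer.scale) to a new field of view in degrees.
// Pinching out (scale > 1) narrows the view, which reads as zooming in.
// The default 30...90 degree limits are illustrative only.
func fieldOfView(startingAt startFOV: Double, pinchScale: Double,
                 limits: ClosedRange<Double> = 30...90) -> Double {
    guard pinchScale > 0 else { return startFOV }
    let proposed = startFOV / pinchScale
    return min(max(proposed, limits.lowerBound), limits.upperBound)
}
```

In a `UIPinchGestureRecognizer` handler, you would record the current field of view in the `.began` state and pass `Double(recognizer.scale)` on each `.changed` update.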

The panorama image has strict texture size requirements: both dimensions must be powers of two, with the width twice the height.

Acceptable image sizes:

- 4096 × 2048

- 2048 × 1024

- 1024 × 512

- 512 × 256

- 256 × 128

- ... (any smaller power of 2)
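To check an image against the list above before loading it, a small helper is enough. This is a minimal sketch, assuming the rule is "power-of-two sides, width exactly twice the height, at most 4096 × 2048" (the largest size listed); the function is hypothetical and not part of PanoramaView.

```swift
// Hypothetical helper (not part of PanoramaView): true when width x height
// matches the sizes listed above: both sides powers of two, width exactly
// twice the height, and width no larger than 4096 (the largest listed size).
func isAcceptablePanoramaSize(width: Int, height: Int) -> Bool {
    // A positive integer is a power of two when exactly one bit is set.
    func isPowerOfTwo(_ n: Int) -> Bool { n > 0 && (n & (n - 1)) == 0 }
    return isPowerOfTwo(width) && isPowerOfTwo(height)
        && width == 2 * height
        && width <= 4096
}
```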

```objc
-(void) setImage:(UIImage*)image
-(void) setImageWithName:(NSString*)fileName  // path or bundle. will check at both

// auto-update (usually only one of these at a time is recommended)
-(void) setOrientToDevice:(BOOL)  // activate motion sensors
-(void) setTouchToPan:(BOOL)      // activate UIPanGesture

// aligns z-axis (into screen)
-(void) orientToVector:(GLKVector3)
-(void) orientToAzimuth:(float) Altitude:(float)

// rotate cardinal north around the image horizon. in degrees
-(void) setCardinalOffset:(float)

-(void) setFieldOfView:(float)    // in degrees
-(void) setPinchToZoom:(BOOL)     // activate UIPinchGesture
-(void) setShowTouches:(BOOL)     // overlay latitude longitude intersects

-(BOOL) touchInRect:(CGRect)                      // hotspot detection in world coordinates
-(CGPoint) screenLocationFromVector:(GLKVector3)  // 2D screen point from a 3D point
-(GLKVector3) vectorFromScreenLocation:(CGPoint)  // 3D point from 2D screen point
-(CGPoint) imagePixelAtScreenLocation:(CGPoint)   // 3D point from 2D screen point,
                                                  // except this 3D point is expressed as 2D pixel unit in the panorama image

-(void) setVRMode:(BOOL)
```

This activates a split screen that works inside VR headsets like Google Cardboard. It is TBD whether more VR best practices are needed, such as a barrel shader.

- The illusion of varying depth is not available: both screens are rendered from the same image, with no interpupillary distance (IPD) offset between the two cameras.
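The split screen amounts to drawing the same scene twice, once into each half of the drawable. A minimal sketch of the viewport arithmetic, using a hypothetical `Viewport` struct that mirrors `MTLViewport`'s `originX`, `originY`, `width`, and `height` fields (the real type also has `znear` and `zfar`) so it runs without Metal. Both eyes share one camera, matching the note above that no IPD offset is applied.

```swift
// Hypothetical stand-in for MTLViewport so the sketch runs without Metal.
struct Viewport: Equatable {
    var originX: Double
    var originY: Double
    var width: Double
    var height: Double
}

// Split a drawable of the given pixel size into side-by-side eye viewports.
// The same camera is used for both, so there is no stereo depth.
func splitScreenViewports(drawableWidth: Double,
                          drawableHeight: Double) -> (left: Viewport, right: Viewport) {
    let half = drawableWidth / 2
    let left = Viewport(originX: 0, originY: 0, width: half, height: drawableHeight)
    let right = Viewport(originX: half, originY: 0, width: half, height: drawableHeight)
    return (left, right)
}
```

Each half would be drawn after calling `setViewport(_:)` on the render command encoder, and the projection matrix for each eye should use the half-width aspect ratio, `(drawableWidth / 2) / drawableHeight`, or the image will look horizontally squashed.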

copy PanoramaView.swift and Sphere.swift into your project

@interface ViewController (){

PanoramaView *panoramaView;

}

@end

@implementation ViewController

- (void)viewDidLoad{

[super viewDidLoad];

panoramaView = [[PanoramaView alloc] init];

[panoramaView setImageWithName:@"image.jpg"];

[self setView:panoramaView];

}

@endclass ViewController: MTKView {

let panoramaView:PanoramaView

required init?(coder aDecoder: NSCoder) {

panoramaView = PanoramaView()

super.init(coder: aDecoder)

}

override func viewDidLoad() {

super.viewDidLoad()

panoramaView.setImageWithName("image.jpg")

self.view = panoramaView

}

override func glkView(_ view: GLKView, drawIn rect: CGRect) {

panoramaView.draw()

}

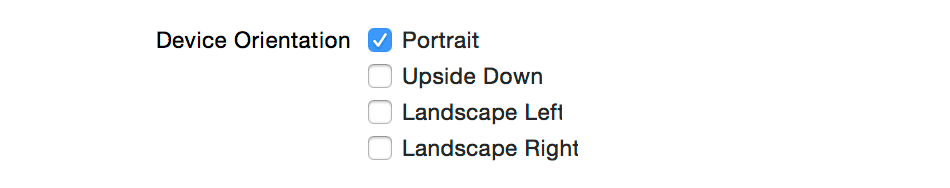

}- no device landscape/portrait auto-rotation

- any of the 4 device orientations works, but use only one.

- azimuth and altitude

- look direction, the Z vector pointing through the center of the screen

The program begins facing the center column of the image, or azimuth 0°.
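The two ways of specifying direction above are interchangeable: an azimuth/altitude pair converts to a unit look vector and back. A minimal sketch under an assumed convention that the project does not confirm: y is up, azimuth 0 looks down -z (into the screen), and positive azimuth turns toward +x. The function names are hypothetical.

```swift
import Foundation

// Convert azimuth/altitude in degrees to a unit look vector.
// Assumed convention: y is up, azimuth 0 looks down -z (into the screen),
// and positive azimuth turns toward +x.
func lookVector(azimuthDegrees az: Double,
                altitudeDegrees alt: Double) -> (x: Double, y: Double, z: Double) {
    let a = az * .pi / 180
    let e = alt * .pi / 180
    return (cos(e) * sin(a), sin(e), -cos(e) * cos(a))
}

// Inverse of lookVector for a unit vector, returning degrees.
func azimuthAltitude(of v: (x: Double, y: Double, z: Double)) -> (azimuth: Double, altitude: Double) {
    let az = atan2(v.x, -v.z) * 180 / .pi
    let alt = asin(max(-1, min(1, v.y))) * 180 / .pi  // clamp guards against rounding
    return (az, alt)
}
```

With this convention, the starting view (azimuth 0°, altitude 0°) is the vector (0, 0, -1).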

Equirectangular images mapped onto the inside of a celestial sphere look like the original scene, and the math is relatively simple: http://en.wikipedia.org/wiki/Equirectangular_projection
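The "relatively simple" math is a linear mapping: azimuth maps to the image column and altitude to the image row. This is also the final step behind something like `imagePixelAtScreenLocation:`. A minimal sketch, assuming azimuth increases left to right across the image and altitude +90° is the top row; only "azimuth 0° is the center column" comes from this README. The function name is hypothetical.

```swift
// Map azimuth/altitude (degrees) to a pixel in an equirectangular image.
// Azimuth 0 lands on the center column, as stated above; azimuth increasing
// to the right and altitude +90 at the top row are assumptions.
func equirectangularPixel(azimuthDegrees az: Double, altitudeDegrees alt: Double,
                          width: Double, height: Double) -> (x: Double, y: Double) {
    // Shift azimuth by 180 and wrap into 0..<360 so the column stays in the image.
    var wrapped = (az + 180).truncatingRemainder(dividingBy: 360)
    if wrapped < 0 { wrapped += 360 }
    let x = wrapped / 360 * width
    let y = (90 - alt) / 180 * height
    return (x, y)
}
```

For a 4096 × 2048 image, azimuth 0°, altitude 0° lands at pixel (2048, 1024), the center of the image.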

MIT License